KAIST develops next-generation ultra-low power LLM accelerator

SEOUL, March 6 (Yonhap) -- A research team at the Korea Advanced Institute of Science and Technology (KAIST) has developed the world's first artificial intelligence (AI) semiconductor capable of processing a large language model (LLM) with ultra-low power consumption, the science ministry said Wednesday.

The team, led by Professor Yoo Hoi-jun at the KAIST PIM Semiconductor Research Center, developed a "Complementary-Transformer" AI chip that runs GPT-2 on an ultra-low 400 milliwatts of power, with a processing time of 0.4 seconds, according to the Ministry of Science and ICT.

The 4.5-mm-square chip, built on Korean tech giant Samsung Electronics Co.'s 28-nanometer process, draws 625 times less power than global AI chip giant Nvidia's A100 GPU, which requires 250 watts to process LLMs, the ministry explained.
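The 625-fold figure follows directly from the two power numbers the ministry cites: 250 watts for the A100 and 400 milliwatts for the KAIST chip. A minimal Python check, taking those figures at face value and assuming they describe comparable workloads; the constant and function names are illustrative only. Working in milliwatts keeps the division exact:

```python
# Power figures as cited by the ministry, in milliwatts.
A100_POWER_MW = 250_000   # Nvidia A100: 250 W cited for processing LLMs
CHIP_POWER_MW = 400       # KAIST Complementary-Transformer running GPT-2: 400 mW

def power_ratio(reference_mw: int, chip_mw: int) -> float:
    """Return how many times less power the chip draws than the reference."""
    return reference_mw / chip_mw

ratio = power_ratio(A100_POWER_MW, CHIP_POWER_MW)
share = CHIP_POWER_MW / A100_POWER_MW  # the chip's power as a fraction of the A100's
print(f"{ratio:.0f}x less power ({share:.2%} of the A100's)")
# prints: 625x less power (0.16% of the A100's)
```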

The chip is also 41 times smaller in area than the Nvidia GPU, small enough to be used in devices such as mobile phones, where processing data on the device instead of sending it to a server better protects users' privacy.
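The "41 times smaller" claim can be checked with a short Python calculation, under two assumptions not stated in the article: that "4.5-mm-square" means 4.5 mm on each side, and that the comparison uses the publicly reported 826 mm² die area of the A100's GA100 processor. Under those assumptions the ratio comes out at about 40.8, consistent with the rounded figure of 41:

```python
# Assumption: "4.5-mm-square" means a square die 4.5 mm on each side.
CHIP_SIDE_MM = 4.5
# Assumption: publicly reported GA100 (A100) die area; not given in the article.
A100_DIE_AREA_MM2 = 826.0

chip_area_mm2 = CHIP_SIDE_MM ** 2              # 20.25 mm^2
area_ratio = A100_DIE_AREA_MM2 / chip_area_mm2
print(f"chip area: {chip_area_mm2:.2f} mm^2, ratio: {area_ratio:.1f}x")
# prints: chip area: 20.25 mm^2, ratio: 40.8x
```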

The KAIST team has successfully demonstrated its LLM accelerator performing various language processing tasks on Samsung's latest smartphone, the Galaxy S24, Kim Sang-yeob, a researcher on the team, told reporters at a press briefing. The Galaxy S24 is the world's first smartphone model with on-device AI, offering features such as real-time translation of phone calls and improved camera performance, he said.

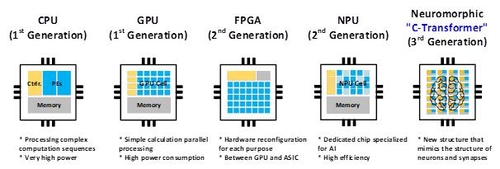

The ministry said the key to the achievement was neuromorphic computing, which mimics the way the human brain works, and specifically spiking neural networks (SNNs).

SNNs had previously been less accurate than deep neural networks (DNNs) and were mainly limited to simple image classification, but the research team raised their accuracy to the level of DNNs, making it possible to apply them to LLMs.

The team said its new AI chip optimizes computational energy consumption while maintaining accuracy by using a unique neural network architecture that fuses DNNs and SNNs, and that it effectively compresses the large parameters of LLMs.
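The article does not describe how the chip's hybrid DNN-SNN architecture works internally. As a generic illustration of why spiking networks can save energy, the Python sketch below simulates one layer of textbook leaky integrate-and-fire (LIF) neurons. It is not the KAIST design, and the function name `lif_layer` and its `threshold` and `leak` parameters are hypothetical. Because spikes are binary (0 or 1), each neuron's input current is simply the sum of the weights of the inputs that fired, so the input multiply-accumulate operations of a conventional DNN layer reduce to additions:

```python
import numpy as np

def lif_layer(spikes: np.ndarray, weights: np.ndarray,
              threshold: float = 1.0, leak: float = 0.9) -> np.ndarray:
    """Simulate one layer of leaky integrate-and-fire (LIF) neurons.

    Hypothetical textbook model, not the KAIST chip's architecture.
    spikes:  (T, n_in) array of 0/1 input spikes over T time steps.
    weights: (n_in, n_out) synaptic weights.
    Returns a (T, n_out) array of 0/1 output spikes.
    """
    n_steps, n_out = spikes.shape[0], weights.shape[1]
    v = np.zeros(n_out)                       # membrane potential per neuron
    out = np.zeros((n_steps, n_out), dtype=np.int8)
    for t in range(n_steps):
        active = spikes[t].astype(bool)
        # Binary inputs: instead of multiplying every input by its weight,
        # add up only the weight rows of the inputs that spiked this step.
        current = weights[active].sum(axis=0)
        v = leak * v + current                # decay old potential, then integrate
        fired = v >= threshold
        out[t] = fired                        # record output spikes as 0/1
        v[fired] = 0.0                        # reset neurons that fired
    return out

# Usage: two input neurons feeding two output neurons over four time steps.
spikes = np.array([[1, 0], [1, 1], [0, 0], [1, 1]])
w = np.array([[0.6, 0.2],
              [0.5, 0.9]])
out = lif_layer(spikes, w)
print(out.tolist())  # [[0, 0], [1, 1], [0, 0], [1, 1]]
```

Practical SNN systems also need to encode inputs as spike trains and train the network, which this sketch omits; it only shows how binary spikes turn weighted sums into plain additions.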

"Neuromorphic computing is a technology that global tech giants like IBM and Intel failed to realize. We believe we are the first to run an LLM with an ultra-low-power neuromorphic accelerator," Yoo said.

nyway@yna.co.kr

(END)